The NVIDIA Grace Hopper Superchip is a high-performance computing platform built to meet the demanding requirements of large-scale AI and HPC (High-Performance Computing) applications. It combines the Grace CPU, based on the Arm architecture, with the Hopper GPU, NVIDIA’s data-centre GPU architecture. Because the two processors sit on a single module and are joined by a fast chip-to-chip link rather than living on separate cards, significant gains in data movement and energy efficiency are possible. The Grace Hopper Superchip is well suited to AI model training, scientific simulations, and data analytics, all of which involve enormous datasets and sophisticated algorithms. Let’s dive in.

Grace CPU Superchip Architecture

The Grace CPU is the Arm-based half of the superchip. Its architecture is intended to meet the growing demand for compute performance, particularly in AI and high-performance computing (HPC).

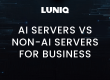

At the heart of NVIDIA’s superchip is the NVLink-C2C (chip-to-chip) interface. NVLink-C2C provides a high-speed, low-latency connection between the Grace CPU and the Hopper GPU, which makes data sharing and communication between them more efficient.

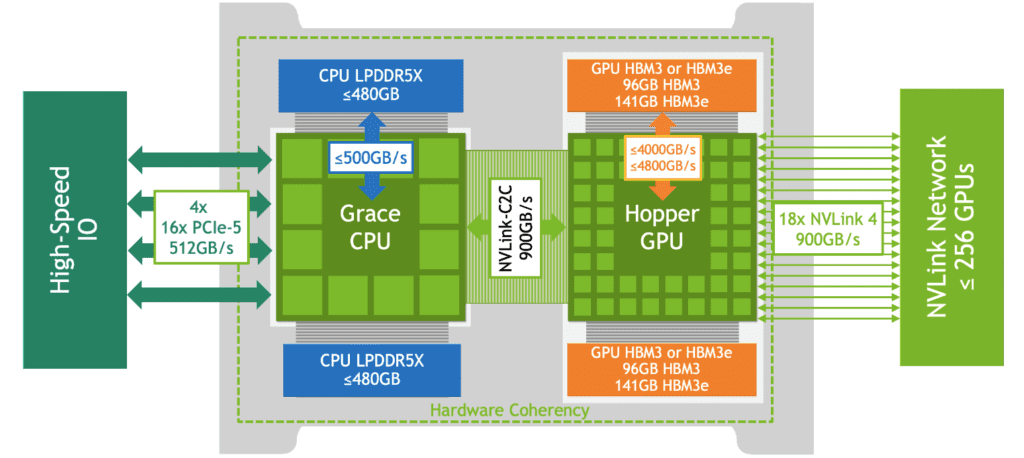

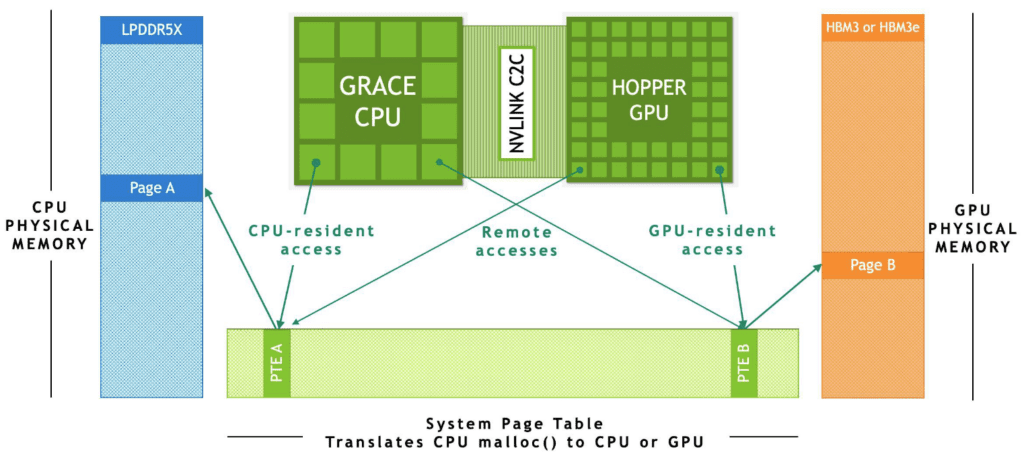

A key feature of the Grace Hopper architecture is its support for unified memory across the CPU and GPU, which simplifies programming models and improves data sharing efficiency. This unified memory system is made possible by NVLink-C2C, which lets each processor access the other’s memory directly.

The Grace CPU is based on the Arm Neoverse V2 core, which is designed for high performance at good energy efficiency. This core selection enables the Grace CPU to provide the processing capacity required for demanding workloads while keeping power consumption in check, which is crucial in large-scale data centres.

The Grace Hopper architecture also incorporates LPDDR5X memory, which offers higher bandwidth and lower power consumption than conventional server DDR memory. This memory selection is consistent with the Grace CPU’s overall design philosophy, which emphasises performance efficiency and energy savings.

Taken together, these choices make the Grace CPU side of the superchip well suited to AI and HPC applications that require rapid data processing and energy efficiency.

Hopper GPU Architecture

The Hopper architecture supports Multi-Instance GPU (MIG), which allows a single GPU to be partitioned into as many as seven smaller, fully isolated instances. This makes GPU resource utilisation more efficient, particularly in multi-tenant setups where different users or programs run on separate instances without interfering with each other.

Hopper also introduces fourth-generation NVLink, a high-speed interconnect that raises data transfer rates between GPUs, while the CPU-to-GPU path inside the superchip is handled by NVLink-C2C. This improvement is critical for applications requiring fast data flow, such as AI training and high-performance computing jobs.

Another important feature of the Hopper design is its support for cutting-edge memory technologies such as HBM3 (High Bandwidth Memory). HBM3 offers greater bandwidth and memory capacity than its predecessors, which is critical for processing massive datasets and sophisticated computations.

The design also includes dedicated AI and machine learning hardware, such as the Transformer Engine. This specialised hardware speeds up the operations typical of transformer-based models, which are becoming more prominent in natural language processing and other AI applications.

Unlike NVIDIA’s graphics-oriented GPUs, Hopper is a compute-focused data-centre design. It retains only limited graphics capability, so ray tracing and real-time rendering for virtual reality, gaming, and professional visualisation are better served by NVIDIA’s graphics-class GPUs.

NVLink-C2C

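NVLink-C2C (Chip-to-Chip) is designed to significantly increase the data transfer rate between the Grace CPU and the Hopper GPU. NVIDIA quotes up to 900 GB/s of total bandwidth for the link, roughly seven times that of a PCIe Gen 5 x16 connection. GPU-to-GPU traffic across multiple superchips travels over regular NVLink instead.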

In a typical computer system, the CPU and GPU communicate via a standard bus like PCIe (Peripheral Component Interconnect Express). While PCIe is quite capable, it has limitations in bandwidth and latency, especially when dealing with large-scale data processing tasks common in AI, deep learning, and scientific simulations.

NVLink-C2C addresses these limitations by providing a much faster and more efficient communication pathway. Think of PCIe as a two-lane road where data can travel at a certain speed, and NVLink-C2C as a multi-lane highway allowing for much faster and greater volumes of traffic. This high-bandwidth, low-latency interconnect drastically reduces the time it takes for the CPU and GPU to exchange data.
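To put rough numbers on the road analogy, the sketch below estimates how long it takes to move a payload over each link. The per-direction bandwidths are nominal peak figures (about 64 GB/s for PCIe Gen 5 x16 and 450 GB/s for NVLink-C2C, half of its quoted 900 GB/s total), and the 80 GB payload is an arbitrary example. Real transfers see lower, workload-dependent throughput, so treat this as an upper bound rather than a benchmark.

```python
GB = 1e9  # decimal gigabyte, as used in vendor bandwidth figures

# Nominal peak bandwidth per direction, in GB/s (assumed round figures).
LINKS_GB_PER_S = {
    "PCIe Gen5 x16": 64.0,
    "NVLink-C2C": 450.0,
}


def transfer_seconds(size_bytes: float, bandwidth_gb_per_s: float) -> float:
    """Ideal transfer time, ignoring latency and protocol overhead."""
    return size_bytes / (bandwidth_gb_per_s * GB)


if __name__ == "__main__":
    payload = 80 * GB  # hypothetical payload, e.g. a large set of model weights
    for name, bandwidth in LINKS_GB_PER_S.items():
        print(f"{name}: {transfer_seconds(payload, bandwidth):.3f} s")
```

Under these assumptions the same payload moves about seven times faster over NVLink-C2C, which matches the ratio NVIDIA quotes for the two links.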

This is particularly important in scenarios where the CPU needs to perform complex computations and then quickly pass data to the GPU for parallel processing (or vice versa). In AI and machine learning, for instance, this rapid exchange of data is crucial for training models efficiently. NVLink-C2C ensures that the CPU and GPU can work together more harmoniously and efficiently, leading to significant performance improvements in compute-intensive tasks.

LPDDR5X Memory

The NVIDIA Grace Hopper architecture’s LPDDR5X memory system is designed for high-performance computing workloads. It is built around a 16-channel memory subsystem, with each channel 16 bits wide. This design yields a total interface width of 256 bits, which is critical for achieving high data throughput.

The LPDDR5X memory system operates at a data rate of 8400 MT/s, a significant improvement in bandwidth over its predecessors. This high data rate matters for applications that need fast access to massive amounts of data, such as AI and machine learning.
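The figures above can be combined into a theoretical peak bandwidth: bus width in bytes multiplied by transfers per second. The sketch below does that arithmetic using only the numbers given in this section (16 channels, 16 bits each, 8400 MT/s). It describes a single 256-bit interface as stated here; vendor-published totals for the whole superchip may differ, and sustained bandwidth is always lower than the peak.

```python
def peak_bandwidth_gb_per_s(channels: int, bits_per_channel: int,
                            mega_transfers_per_s: float) -> float:
    """Theoretical peak bandwidth in GB/s for a parallel memory interface."""
    bus_bytes = channels * bits_per_channel / 8        # bytes moved per transfer
    bytes_per_second = bus_bytes * mega_transfers_per_s * 1e6
    return bytes_per_second / 1e9


if __name__ == "__main__":
    # Figures taken from the text: 16 channels x 16 bits at 8400 MT/s.
    print(f"{peak_bandwidth_gb_per_s(16, 16, 8400):.1f} GB/s")
```

With these inputs the interface tops out at 268.8 GB/s, that is, 32 bytes per transfer times 8.4 billion transfers per second.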

The system also includes extensive power management features. Deep Sleep Mode is especially notable, since it allows the memory to enter a low-power state while not in use, considerably lowering power consumption. This is supplemented by Dynamic Voltage and Frequency Scaling (DVFS), which adjusts voltage and frequency depending on workload, improving power efficiency even further.
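The idea behind DVFS can be illustrated with a toy governor. The sketch below picks the lowest operating point that still covers the current load and estimates dynamic power with the standard approximation that power scales with voltage squared times frequency. The operating points are hypothetical values chosen for illustration, not Grace or LPDDR5X specifications, and a real governor would also consider thermal limits and transition costs.

```python
# Hypothetical operating points: (frequency in MHz, voltage in volts),
# sorted from lowest to highest. Not real hardware values.
OPERATING_POINTS = [(1000, 0.6), (2000, 0.8), (4000, 1.0)]


def pick_operating_point(utilisation: float) -> tuple:
    """Return the lowest (frequency, voltage) pair that covers the load."""
    if not 0.0 <= utilisation <= 1.0:
        raise ValueError("utilisation must be between 0 and 1")
    needed_mhz = utilisation * OPERATING_POINTS[-1][0]
    for freq, volt in OPERATING_POINTS:
        if freq >= needed_mhz:
            return freq, volt
    return OPERATING_POINTS[-1]


def relative_dynamic_power(freq: float, volt: float) -> float:
    """Dynamic power relative to the top operating point (P ~ V^2 * f)."""
    max_freq, max_volt = OPERATING_POINTS[-1]
    return (volt ** 2 * freq) / (max_volt ** 2 * max_freq)


if __name__ == "__main__":
    for load in (0.1, 0.4, 1.0):
        freq, volt = pick_operating_point(load)
        power = relative_dynamic_power(freq, volt)
        print(f"load {load:.0%}: {freq} MHz at {volt} V, relative power {power:.2f}")
```

Because voltage enters the formula squared, dropping to half the frequency at a lower voltage saves considerably more than half the dynamic power, which is why scaling both together pays off.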

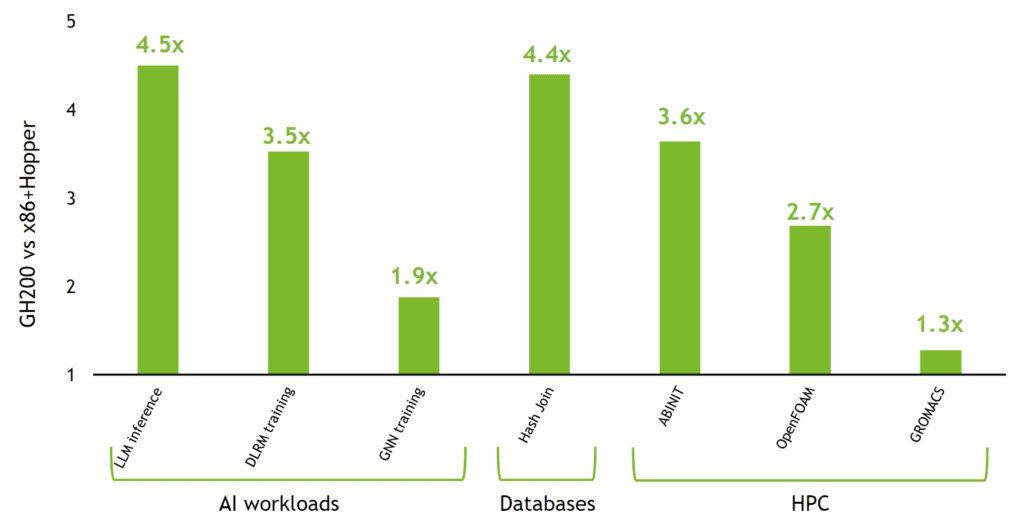

AI Workloads and Performance

The NVIDIA Grace Hopper Superchip architecture improves performance substantially for HPC (High-Performance Computing) and, in particular, for AI applications. The integration of the Grace CPU and Hopper GPU, which are linked via the high-speed NVLink-C2C interconnect, is principally responsible for this improvement.

The advanced capabilities of the Hopper GPU significantly improve the architecture’s performance in AI tasks. The Transformer Engine, for example, was created expressly to accelerate transformer-based models, which are common in modern AI workloads. It runs matrix multiplications in 8-bit floating point (FP8) where accuracy allows and falls back to 16-bit formats where it does not, which cuts compute and memory traffic and results in faster, more efficient model training and inference.
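On Hopper, much of the Transformer Engine’s speed-up comes from running matrix multiplications in 8-bit floating point (FP8), with a per-tensor scaling factor so that values fit the narrow FP8 range. The sketch below illustrates that scaling idea in plain Python. It is a simplified model, not NVIDIA’s implementation: the function names are hypothetical, the scale is derived from the current tensor’s largest absolute value, and rounding keeps four significant bits (as in the E4M3 format) while ignoring the format’s limited exponent range and special values.

```python
import math

E4M3_MAX = 448.0  # largest finite value representable in FP8 E4M3


def round_to_4_significant_bits(x: float) -> float:
    """Round x to 1 implicit + 3 explicit mantissa bits (exponent range ignored)."""
    if x == 0.0:
        return 0.0
    mantissa, exponent = math.frexp(x)  # x == mantissa * 2**exponent, 0.5 <= |mantissa| < 1
    return math.ldexp(round(mantissa * 16) / 16, exponent)


def fake_fp8_roundtrip(values):
    """Scale into the E4M3 range, round, and scale back. Returns (values, scale)."""
    amax = max(abs(v) for v in values)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    quantised = [
        round_to_4_significant_bits(max(-E4M3_MAX, min(E4M3_MAX, v * scale)))
        for v in values
    ]
    return [q / scale for q in quantised], scale


if __name__ == "__main__":
    original = [0.001, -0.5, 3.0, 12.0]
    recovered, scale = fake_fp8_roundtrip(original)
    for before, after in zip(original, recovered):
        print(f"{before:>8} -> {after:.6f}")
```

The scaling step maps the largest value in the tensor onto the top of the FP8 range, so the few mantissa bits available are spent on the values that are actually present. In this simplified model the relative rounding error stays within about 6%, which hints at why some layers tolerate FP8 well while others need 16-bit precision.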

The Grace CPU adds to AI performance through its high memory bandwidth and large cache, both of which matter when processing the large datasets common in AI applications. They keep data close to the cores so it can be accessed and processed quickly, minimising bottlenecks in data-intensive workloads.

Software Ecosystem

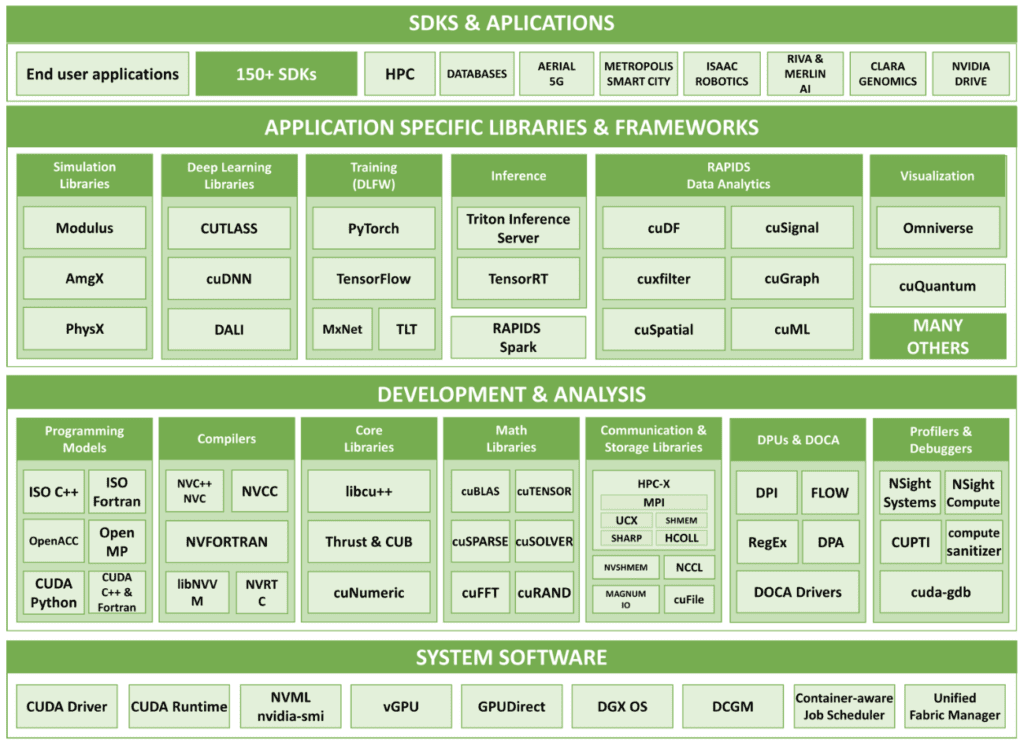

The software ecosystem of the NVIDIA Grace Hopper Superchip is intended to provide complete support for a wide range of applications, with a focus on AI and high-performance computing. It is based on NVIDIA’s CUDA-X libraries, which are specialised for various areas such as AI, data analytics, and scientific computing. These libraries have been optimised to take advantage of the Grace Hopper architecture’s specific hardware capabilities, resulting in efficient performance.

Support for major AI frameworks such as TensorFlow and PyTorch is also included in the ecosystem, allowing developers to easily deploy their AI models on the Grace Hopper Superchip. Another important component is NVIDIA’s AI Enterprise software suite, which provides a scalable and secure platform for AI workloads. This package provides tools for model training, inference, and management, making it easier for enterprises to incorporate artificial intelligence into their operations.

The NVIDIA HPC SDK provides a comprehensive set of compilers, libraries, and tools that let developers make full use of the Grace Hopper Superchip in high-performance computing applications. The SDK is designed to optimise code for the capabilities of the architecture, helping applications get the most out of the hardware.

Energy Efficiency

The energy efficiency of the NVIDIA Grace Hopper Superchip comes primarily from its design and architecture. The Superchip is built on cutting-edge 4nm- and 5nm-class process nodes. These smaller nodes are more energy-efficient because they fit more transistors into the same area, delivering higher performance while consuming less power. The Superchip also has a unified memory architecture, which allows the CPU and GPU to share memory. This reduces the need to duplicate and move data, resulting in energy savings.

In addition to these architectural advantages, the Superchip incorporates dynamic power management. It regulates CPU and GPU power usage based on workload, so that the Superchip runs efficiently across a variety of scenarios.

Use Cases and Applications

NVIDIA’s Grace Hopper Superchip is intended for use in a variety of high-performance computing applications. Its architecture allows it to excel at jobs that need a lot of computational power and data processing. This makes it a strong fit for scientific research, which frequently involves sophisticated simulations and data analysis in domains such as climate modelling, astrophysics, and molecular dynamics.

Its design is also optimised for deep learning, enabling faster model training. This is especially useful in applications that require fast and precise data processing, such as natural language processing, image recognition, and autonomous vehicle technology.

The superchip also finds use in data centres, where it improves performance for cloud computing and virtualisation. Its high throughput and low latency are critical in these environments, which support services ranging from web hosting to complex database management systems. The Grace Hopper Superchip’s capacity to run several jobs efficiently at the same time makes it a useful asset here, helping data centre services run smoothly and reliably.

References